Instagram offers two ways of sharing images: permanent posts and ephemeral stories. In this chapter I present three approaches for collecting posts: Instaloader, CrowdTangle, and Zeeschuimer.

Posts are shaped by several affordances and can contain different types of media: at least one image or video, often paired with text (the caption). A post may also be an album consisting of more than one image or video. Captions may contain hashtags and/or mentions. Hashtags are used to self-organize posts on the platform: users can follow hashtags and search for them. Mentions link a post to another profile. Moreover, users can like, share, and comment on posts. Some data-collection approaches, like CrowdTangle, offer access to only one image per post, along with post metrics such as comment and like counts. Instaloader offers access to all images and videos, but it is the most legally questionable approach. And then there is the middle ground: Zeeschuimer (optionally in connection with 4CAT).
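Since captions carry hashtags and mentions, these are often the first things extracted from collected posts. The following is a minimal sketch, assuming simplified patterns (my assumption, not Instagram's exact rules): a hashtag is `#` followed by word characters, a mention is `@` followed by word characters or dots. The function names are hypothetical helpers, not part of any of the tools discussed here.

```python
import re

# Simplified patterns (an assumption, not Instagram's exact rules).
# \w also matches non-ASCII letters, so "#München" is captured.
HASHTAG_RE = re.compile(r"#(\w+)")
# Usernames may contain letters, digits, underscores and dots.
# Limitation: an e-mail address like "a@example.com" would match "example.com".
MENTION_RE = re.compile(r"@([\w.]+)")


def extract_hashtags(caption):
    """Return hashtags (without '#') in order of appearance, lowercased
    so that '#Vote' and '#vote' are counted as the same tag."""
    return [tag.lower() for tag in HASHTAG_RE.findall(caption or "")]


def extract_mentions(caption):
    """Return mentioned usernames (without '@'); a trailing dot, e.g. from
    the end of a sentence, is stripped."""
    return [m.rstrip(".") for m in MENTION_RE.findall(caption or "")]
```

For example, `extract_hashtags("Go vote! #Wahl2024 #München")` yields `["wahl2024", "münchen"]`. Passing `None` (a post without caption) returns an empty list instead of raising an error.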

In the following subchapters I will try to illuminate the advantages of each collection method. For each method I provide a manual for collecting the metadata and the actual media of Instagram posts.

As of November 2024 I recommend collecting posts using Zeeschuimer or the official Meta Content Library. Our preferred approach for the 2024/25 winter semester is the combination of Zeeschuimer and the Import Notebook: we import the ndjson file created by Zeeschuimer into a custom notebook, convert the data, and download images and videos. An updated notebook for downloading album posts will be added shortly.

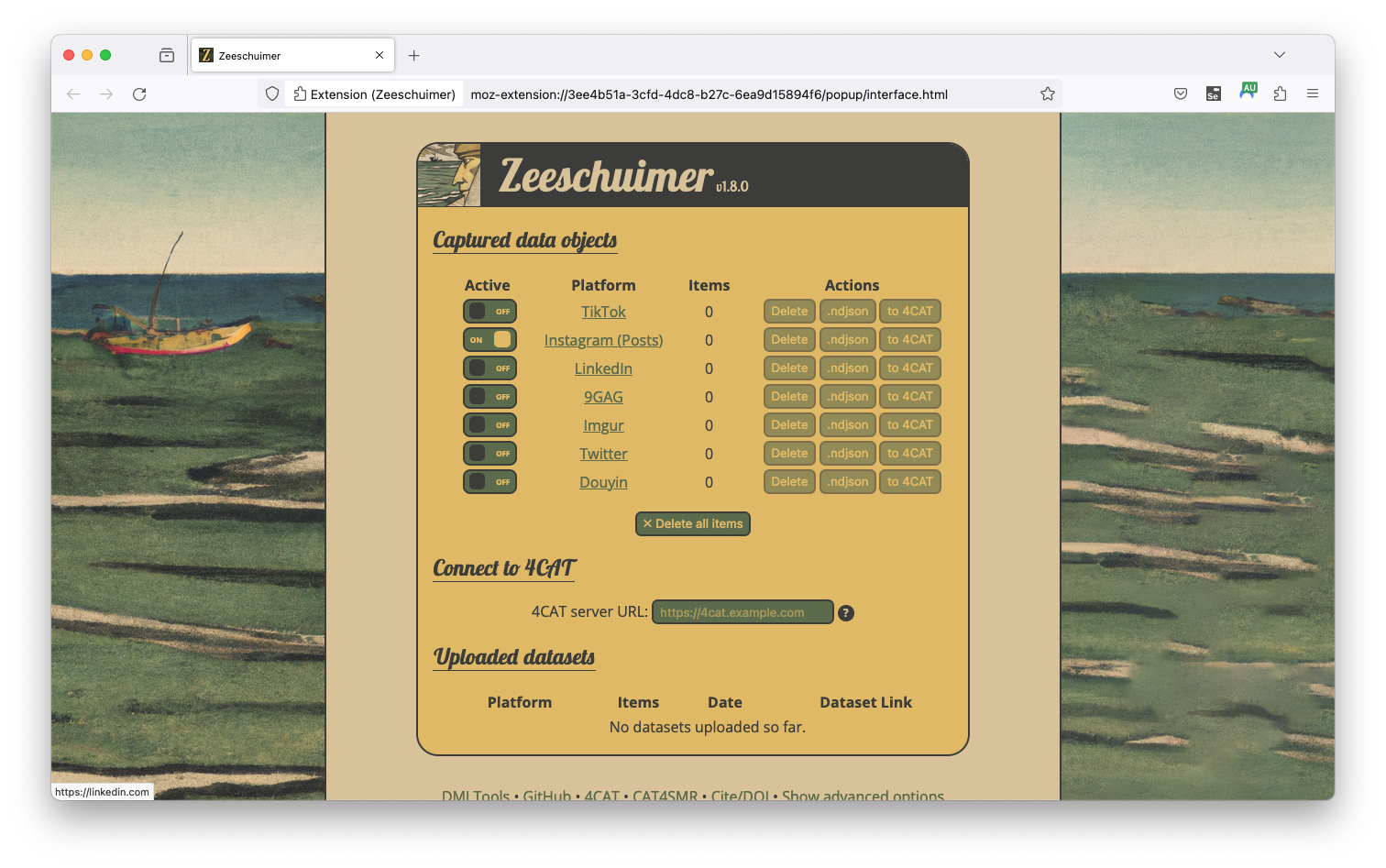

Zeeschuimer (Peeters, n.d.) and 4CAT (Peeters, Hagen, and Wahl, n.d.) are two tools developed by the Digital Methods Initiative (https://wiki.digitalmethods.net/). The first is a Firefox plugin that captures traffic when browsing websites like Instagram or TikTok. The second, 4CAT, is an analysis platform incorporating several preprocessing steps and further analyses. For post collection we can use the original Zeeschuimer Firefox plugin: download the latest release from GitHub and install it in Firefox. To download Instagram posts using Zeeschuimer, follow these steps (steps marked with * are only necessary when working with 4CAT):

https://4cat.digitalhumanities.io/

Data collected using Zeeschuimer can also be exported as ndjson files. The Zeeschuimer Import Notebook provides a code example for reading these files and converting them either to 4CAT format or to a table format compatible with the notebooks for CrowdTangle and Instaloader.
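An ndjson file holds one JSON object per line. The Import Notebook itself is not reproduced here; the following is only a minimal sketch of reading such a file with pandas. It assumes (check this against your own export) that each line wraps the raw post under a `data` key; lines without that key are flattened as they are. `read_ndjson` and `ndjson_to_dataframe` are hypothetical helper names.

```python
import json

import pandas as pd


def read_ndjson(path):
    """Read a newline-delimited JSON file into a list of dicts (one per line)."""
    records = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip empty lines, e.g. a trailing newline
                records.append(json.loads(line))
    return records


def ndjson_to_dataframe(path, payload_key="data"):
    """Flatten each item's payload into one table row.

    ASSUMPTION: the raw post sits under `payload_key`; records without
    that key are flattened as a whole. Nested fields become dotted
    column names, e.g. {"caption": {"text": ...}} -> "caption.text".
    """
    records = read_ndjson(path)
    payloads = [r.get(payload_key, r) for r in records]
    return pd.json_normalize(payloads)
```

Flattening with `pd.json_normalize` keeps every nested field, which produces many columns for Instagram data; in practice you would select the few columns you need afterwards.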

The old version of the notebook still exists; it downloads only one image per post.

This is our preferred approach for the research seminar. Over the coming weeks we will take a closer look at the data structure and preprocessing of Zeeschuimer data using custom notebooks.

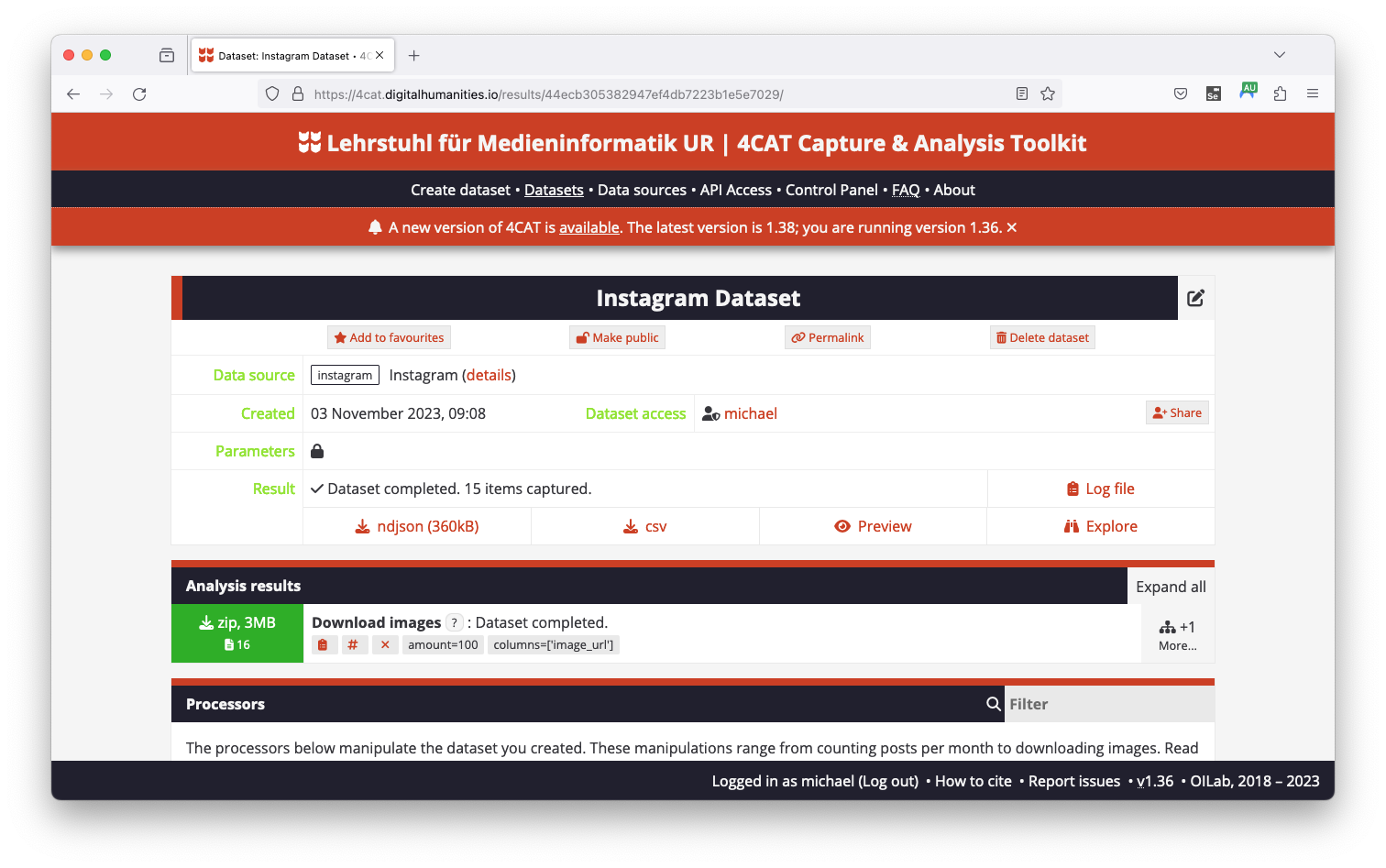

4CAT is a tool developed by the Digital Methods Initiative. The collected data can be exported to 4CAT with a single click. After the post data has been imported successfully, the tool offers several modules. First, download the images associated with each post using the Download images module at the bottom. Select image_url in the options tab and hit Run.

Once the images have been downloaded, more analysis options become available when clicking the More button on the right. You can also download the images as a ZIP file and export the posts from 4CAT in CSV format. Repeat the process with the Download Video function to access posted videos. We can then work with the collected data using the CSV export and the media files provided in the ZIP packages. Additionally, each ZIP file contains a .metadata.json file, which we can use to map the media files to their posts.
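Mapping media files to posts could look roughly like the sketch below. I have not verified the exact schema of the `.metadata.json` file; the sketch ASSUMES it is a JSON object at the root of the ZIP whose values are dicts with a `filename` key and a `post_ids` list. Inspect your own file and adjust the keys before relying on it. `media_map_from_zip` is a hypothetical helper name.

```python
import json
import zipfile

import pandas as pd


def media_map_from_zip(zip_path, metadata_name=".metadata.json"):
    """Return a DataFrame with one row per (post_id, filename) pair.

    ASSUMPTION: the metadata file maps each source URL to a dict with a
    "filename" key and a "post_ids" list; adjust to your actual export.
    """
    with zipfile.ZipFile(zip_path) as zf:
        with zf.open(metadata_name) as f:
            metadata = json.load(f)  # json.load accepts the binary file object
    rows = []
    for url, entry in metadata.items():
        # One media file may belong to several posts, so emit one row per post.
        for post_id in entry.get("post_ids", []):
            rows.append({"post_id": post_id, "filename": entry.get("filename"), "url": url})
    return pd.DataFrame(rows, columns=["post_id", "filename", "url"])
```

The result can then be joined with the CSV export, e.g. `posts.merge(media, left_on="id", right_on="post_id", how="left")`, where the `id` column name in the CSV is likewise an assumption.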

The authors of Zeeschuimer and 4CAT have published a manual here.

Instaloader is a Python package for downloading Instagram pictures and videos along with their metadata. I have written a getting-started tutorial on Medium; together with the provided notebook, it is the basis for this chapter.

Instaloader is a stand-alone piece of software: it offers options to download most Instagram content, like posts and stories, through different strategies, e.g. lists of profiles or hashtags. For complex tasks I recommend calling Instaloader from the terminal; see the documentation for more information.

To download posts and stories from Instagram, we use the package instaloader. You can install Python packages using pip install <package>; the -q flag minimizes the output.

!pip -q install instaloader

Once instaloader is installed, we log in using your username and password. Session information (not your credentials!) is stored in Google Drive to minimize the need to sign in again.

To minimize the risk of your account being disabled, we suggest creating a new account on your phone before proceeding!

username = 'your.username'
# We save the session file to the following directory. Default is the new folder `.instaloader` in your Google Drive (this is optional).
# Assumes Google Drive is already mounted at /content/drive.
session_directory = '/content/drive/MyDrive/.instaloader/'

from pathlib import Path

import instaloader

# Create the session directory if it does not exist yet
Path(session_directory).mkdir(parents=True, exist_ok=True)
sessionfile = Path(session_directory) / f"session-{username}"

# Get instance; compress_json=False writes plain .json instead of .json.xz
L = instaloader.Instaloader(compress_json=False)

# If a session file exists, load the session;
# otherwise log in interactively and save the session for next time
if sessionfile.exists():
    L.load_session_from_file(username, str(sessionfile))
else:
    L.interactive_login(username)
    L.save_session_to_file(str(sessionfile))

Next, we try to download all posts of a profile. Provide a username and folder:

dest_username = 'some.profile'
dest_dir = '/content/drive/MyDrive/insta-posts/'  # Once more we save the files to Google Drive. Replace this with a local directory if necessary.

# Uses `L` (logged-in instance) and `Path` from the login cell
t = Path(dest_dir) / dest_username
t.mkdir(parents=True, exist_ok=True)

profile = instaloader.Profile.from_username(L.context, dest_username)
for post in profile.get_posts():
    L.download_post(post, target=t)

Well, you just downloaded your first posts! Open Google Drive and check the folder insta-posts/ (or whatever folder you chose above). There should be three files for each post: the image, a .json file, and a .txt file. The .txt file contains the image caption; the .json file contains lots of metadata about the post.

The next cell reads all .json files of the downloaded posts. Then we browse through some interesting data.
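That cell is not reproduced in this version of the page. A minimal sketch that does the same job, assuming the folder contains only the post files written by `download_post` with `compress_json=False` (compressed `.json.xz` files would not match the `*.json` pattern); `load_post_json` is a hypothetical helper name:

```python
import json
from pathlib import Path


def load_post_json(directory):
    """Return the parsed content of every .json file below `directory`,
    sorted by path (Instaloader's default filenames start with the date)."""
    json_data = []
    for path in sorted(Path(directory).rglob("*.json")):
        with open(path, encoding="utf-8") as f:
            json_data.append(json.load(f))
    return json_data
```

In the notebook this would be called as `json_data = load_post_json(t)`, with `t` being the download folder from the previous cell.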

Ok, now the metadata of all posts is saved in the variable json_data. Run the next line and paste its output into http://jsonviewer.stack.hu/. Your output should look similar; go ahead and play around to explore your data! What information can you extract?
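The line that prints the data is not shown on this page. A small sketch of one way to produce viewer-friendly output: serialize a single post as indented JSON. `to_viewer_text` is a hypothetical helper; `ensure_ascii=False` keeps umlauts and emoji readable instead of escaping them.

```python
import json


def to_viewer_text(record):
    """Serialize one post's metadata as indented JSON for pasting into a viewer."""
    return json.dumps(record, indent=2, ensure_ascii=False)
```

Usage in the notebook: `print(to_viewer_text(json_data[0]))` prints the first post only, which is usually enough to explore the structure.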

Posts contain plenty of data, like the time and location of the post, the authoring user, a caption, tagged users, and more. The following cells demonstrate how to normalize the data into a table format, which is useful when working with pandas. This step is optional, though!

# Use boolean values (True / False) to select what type of data you'd like to analyse.
# Note: these flags overwrite `username` from the login cell and shadow Python's built-in id().
username = True #@param {type:"boolean"}
timestamp = True #@param {type:"boolean"}
caption = True #@param {type:"boolean"}
location = True #@param {type:"boolean"}
shortcode = True #@param {type:"boolean"}
id = True #@param {type:"boolean"}
tagged_users = True #@param {type:"boolean"}

Next we loop through the data and create a new pandas DataFrame. The DataFrame will have one column for each variable selected above and one row for each downloaded post.

If you are not yet familiar with the concept of DataFrames, have a look at YouTube; there are plenty of introductory videos available.

import pandas as pd
from tqdm import tqdm  # progress bar for the loop

posts = []  # Initializing an empty list for all posts

for post in tqdm(json_data):
    row = {}  # Initializing an empty row for the post
    node = post.get("node") or {}

    if username:
        owner = node.get("owner") or {}
        row['username'] = owner.get("username")
    if timestamp:
        row['timestamp'] = node.get("taken_at_timestamp")
    if location:
        l = node.get("location")
        if l:
            row['location'] = l.get("name")
    if shortcode:
        row['shortcode'] = node.get("shortcode")
    if id:
        row['id'] = node.get("id")
    if tagged_users:
        # Assumption: tagged users are stored under "edge_media_to_tagged_user";
        # if the key is missing, the column stays an empty string.
        edges = (node.get("edge_media_to_tagged_user") or {}).get("edges", [])
        row['tagged_users'] = ",".join(
            (e.get("node") or {}).get("user", {}).get("username", "") for e in edges
        )
    if caption:
        # The caption is stored as a list of edges; concatenate their texts
        edges = (node.get("edge_media_to_caption") or {}).get("edges", [])
        row['caption'] = "".join((e.get("node") or {}).get("text", "") for e in edges)

    # Finally add row to posts
    posts.append(row)

# After looping through all posts create data frame from list
posts_df = pd.DataFrame(posts)

Now all information selected above is saved to the DataFrame posts_df. Run the next cell and it will return a nicely formatted table. If your data is quite long, the output will be cropped. Click the magic wand icon, and after a few seconds you can browse through the data or filter by columns.

To get a first impression of a DataFrame, the head() method is also useful. Run the next cell to see the result.

The DataFrame is only kept in memory, so it is lost when the runtime disconnects or is deleted. Running the next cell saves the table to a CSV file on your drive.

Now the processed data may be recovered or used in another notebook.
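The save and reload cells are not shown on this page. A minimal sketch of the round trip with pandas: `save_posts` and `load_posts` are hypothetical helpers, and the example path in the usage note is an assumption. When reading back, `id` and `shortcode` are forced to strings so that identifiers are not turned into numbers (a shortcode may consist of digits only).

```python
from pathlib import Path

import pandas as pd


def save_posts(df, csv_path):
    """Write the posts table to CSV (creating parent folders) without the index."""
    csv_path = Path(csv_path)
    csv_path.parent.mkdir(parents=True, exist_ok=True)
    df.to_csv(csv_path, index=False)
    return csv_path


def load_posts(csv_path):
    """Read a saved posts table back, keeping ids and shortcodes as strings."""
    return pd.read_csv(csv_path, dtype={"id": str, "shortcode": str})
```

Usage: `save_posts(posts_df, "/content/drive/MyDrive/insta-posts/posts.csv")`, and later, in this or another notebook, `load_posts("/content/drive/MyDrive/insta-posts/posts.csv").head()`.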

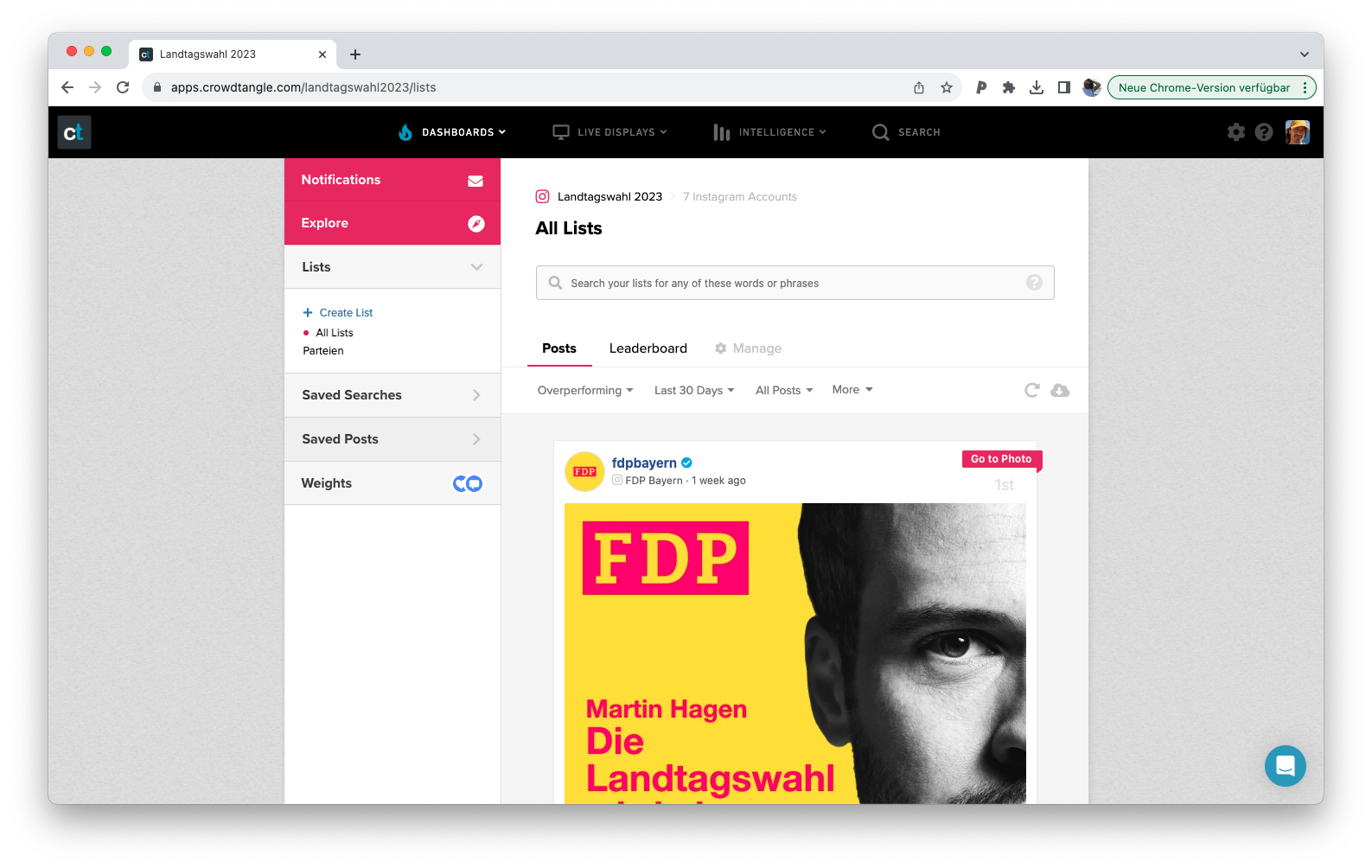

Meta shut down CrowdTangle in August 2024. The tool has been replaced by the Meta Content Library. Researchers can apply for access, which is now managed by a third party, the Inter-university Consortium for Political and Social Research (ICPSR). I will update this page shortly.

CrowdTangle is the best option for collecting IG posts – in theory. It provides legal access to Instagram data and offers several tools to export large amounts of data. For a current project we exported more than 500,000 public posts through a hashtag query. Unfortunately, there are several restrictions. CrowdTangle is best suited to exporting the metadata and captions of public posts; its ability to collect images is limited. Image links expire after a certain amount of time, so we need a makeshift approach to download the images. Even when the download works, we only get one image per post, no matter whether it is a gallery or a single image. And let’s not talk about videos. I have written another Medium story with a step-by-step guide to CrowdTangle.
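The makeshift approach itself is not described on this page. As a generic illustration only, here is a sketch that downloads one image per post from a mapping of post IDs to image URLs and records failures, such as expired links, instead of stopping. The input mapping (`url_by_id`, e.g. built from an exported CSV), the `.jpg` extension, and the function name are all assumptions.

```python
import urllib.request
from pathlib import Path


def download_images(url_by_id, out_dir, timeout=30):
    """Download one image per post; return the ids whose links failed.

    url_by_id: hypothetical mapping {post_id: image_url}. Expired links
    typically fail with an HTTP error; such failures are collected so
    the affected posts can be re-exported and retried later.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    failed = []
    for post_id, url in url_by_id.items():
        target = out_dir / f"{post_id}.jpg"  # assumption: images are JPEGs
        if target.exists():  # skip files fetched in an earlier run
            continue
        try:
            with urllib.request.urlopen(url, timeout=timeout) as response:
                target.write_bytes(response.read())
        except (ValueError, OSError):
            # ValueError: malformed URL; OSError covers URLError/HTTPError
            failed.append(post_id)
    return failed
```

Because existing files are skipped, the function can simply be run again with fresh links for the ids it returned.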

@online{achmann-denkler2024,
  author = {Achmann-Denkler, Michael},
  title = {Instagram {Posts}},
  date = {2024-11-14},
  url = {https://social-media-lab.net/data-collection/ig-posts.html},
  doi = {10.5281/zenodo.10039756},
  langid = {en}
}